With the development of computer science and automatic control technology, more and more types of intelligent robots are appearing in factories and in daily life. As an important subsystem of an intelligent robot, the robot vision system is receiving increasing attention. It involves image processing, pattern recognition, and visual tracking. Different types of robots have different priorities, so their vision systems differ subtly in software or hardware. This paper studies a monocular vision system for a service robot. It processes two-dimensional images, recognizes unobstructed objects by color and shape, and tracks the translational motion of 3D target objects.

A vision system is complex: it must acquire images accurately, respond in real time to changes in the outside world, and track moving targets in real time. It therefore places high demands on both hardware and software. The vision systems of soccer robots, which are currently popular, are a typical example of fast recognition and response. They usually identify players and targets by calibrated color marks, and track targets by extending the prediction function of the Kalman filter. On the hardware side, an off-the-shelf camera is used to build the robot's image acquisition system.

This system uses a CMOS image sensor instead of a CCD sensor to acquire images. The TMS320VC5509A DSP performs the image processing and also serves as the control CPU. To make the recognition and tracking results of the vision system directly visible, a TFT liquid crystal display is added. In software, part of the vision technology of soccer robots is used to recognize the target quickly, and a Jacobian matrix constructed from global feature moments provides adaptive tracking of the target.

1 Hardware design

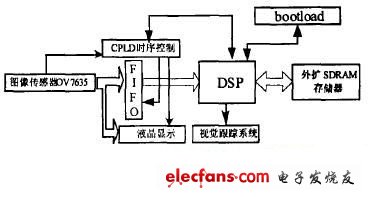

Figure 1 is a functional block diagram of the system hardware circuit.

1.1 Image acquisition

The lens images the external scene onto the area array of the image sensor. Two kinds of image sensor are currently popular: the area-array CCD (Charge-Coupled Device) and the area-array CMOS sensor. Compared with a CCD, the active pixel unit of a CMOS image sensor provides an amplifier for each pixel, and the sensor needs only a single power supply and low logic-level voltages; its power consumption is only about one tenth of that of a CCD. An A/D converter is integrated in the CMOS image sensor, so it outputs digital signals directly. For these reasons, this system uses the CMOS color image sensor OV7635 from OmniVision.

The resolution of the OV7635 is 640 × 480. It can output 8-bit data in three formats: YCbCr 4:2:2, RGB 4:2:2, and RGB raw data. In VGA format the output can reach a maximum of 30 fps (frames per second), and the sensor supports both progressive and interlaced scanning. The OV7635 has two working modes: master mode and slave mode. In master mode, the synchronization signals and the clock are not controlled by external devices. In slave mode, the field synchronization signal VSYNC, the line synchronization signal HREF, and the system clock XCLK of the OV7635 are all controlled by external devices. This system uses master mode. The on-chip registers of the OV7635 are configured through the I2C bus so that it outputs raw data. After the system is powered on and reset, the registers of the CMOS sensor are initialized by the DSP over the I2C bus. The OV7635 then outputs the image signals as required: the line synchronization signal HREF, the field synchronization signal VSYNC, the pixel clock PCLK, and the digital image data.

1.2 LCD display

So that people can see the recognition and tracking results of the vision system directly, a PT035TN01 LCD screen from INNOLUX is used. To avoid adding load to the DSP while still showing the tracking of the external target in real time, the liquid crystal display is driven directly by the image data output by the OV7635 sensor, under timing generated by the CPLD, without passing through the DSP. The PT035TN01 is a 3.5-inch TFT liquid crystal display with a resolution of 320 × 3 (RGB) × 240. Its two input control pins, IF1 and IF2, select the input data format: serial RGB, CCIR601, or CCIR656. The LCD has four scanning modes. This vision system uses the CCIR601 input format and scans from top to bottom and from left to right.

In the CCIR601 format, the pixel clock PCLK output by the image sensor is divided in the CPLD and used as the working clock of the liquid crystal display, and the line synchronization signal HREF output by the image sensor is processed by the CPLD to form the line synchronization signal HIS of the display. In this way, under CPLD control, the data output by the OV7635 image sensor is sent to the liquid crystal display.

1.3 Timing control

The field synchronization signal VSYNC, the line synchronization signal HREF, and the pixel clock PCLK output by the OV7635 are connected to the CPLD, which generates the control signals that store the data output by the OV7635 in the FIFO frame memory AL422B. The CPLD is an EPM7064 from Altera. It implements three functions: write control of the FIFO, notification of the DSP that data can be read, and generation of the clock signal for the liquid crystal display.

The CPLD receives the field synchronization signal VSYNC. The falling edge of this signal indicates the start of a frame output by the image sensor. At this point the CPLD generates a negative WRST pulse to reset the write pointer of the FIFO. After the falling edge of VSYNC, the CPLD waits for the rising edge of the line synchronization signal HREF, and then uses the pixel clock PCLK as the write clock WCK to store the image data directly in the FIFO. Once a certain amount of data has been stored, the CPLD signals the DSP so that it can read the data; this system uses the interrupt INT0 for the notification. The DSP may then read the data or not, depending on its processing speed. When reading, the RD and chip-select signals are used to generate the RCK signal. The DSP must not read too fast: the read speed must be lower than the write speed.
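The write-control sequence above can be illustrated with a small behavioural model. This is only a sketch in Python, not the VHDL running in the CPLD: the class name, the `notify_after` parameter, and the assumption that data is latched on the rising edge of PCLK while HREF is high are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class FifoWriteController:
    """Behavioural model of the FIFO write logic (illustrative, not VHDL)."""

    notify_after: int  # hypothetical: bytes stored before INT0 is raised
    fifo: list = field(default_factory=list)
    frame_started: bool = False
    int0: bool = False
    wrst_pulses: int = 0
    _prev_vsync: int = 1
    _prev_pclk: int = 0

    def step(self, vsync: int, href: int, pclk: int, data: int) -> None:
        """Advance the model by one sample of the sensor signals."""
        # Falling edge of VSYNC: a new frame starts, so pulse WRST
        # and reset the FIFO write pointer.
        if self._prev_vsync == 1 and vsync == 0:
            self.fifo.clear()
            self.frame_started = True
            self.int0 = False
            self.wrst_pulses += 1
        # While HREF is high, each rising edge of PCLK acts as the write clock WCK.
        rising_pclk = self._prev_pclk == 0 and pclk == 1
        if self.frame_started and href == 1 and rising_pclk:
            self.fifo.append(data)
            if len(self.fifo) >= self.notify_after:
                self.int0 = True  # notify the DSP that data can be read
        self._prev_vsync, self._prev_pclk = vsync, pclk


ctrl = FifoWriteController(notify_after=4)
ctrl.step(vsync=1, href=0, pclk=0, data=0)
ctrl.step(vsync=0, href=0, pclk=0, data=0)  # VSYNC falls: frame starts
for value in (10, 20, 30, 40):
    ctrl.step(vsync=0, href=1, pclk=1, data=value)  # rising PCLK edge: write
    ctrl.step(vsync=0, href=1, pclk=0, data=value)  # falling PCLK edge: idle
```

In this model the interrupt flag stays low until `notify_after` bytes have been written, which mirrors the idea that the DSP is only notified once enough data is available to read.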

In the timing logic for the liquid crystal display, the image output by the sensor is 640 × 480 pixels, while the display is 320 × 240. The CPLD therefore divides the pixel clock PCLK from the image sensor by two to generate the display clock, and enables the line synchronization signal only on alternate lines, so that the image fits the display. The CPLD is programmed in the hardware description language VHDL on the Quartus II software platform. Because the 44-pin PLCC package of the EPM7064S series was chosen, the chip can only work at 5 V and its high-level outputs are 5 V, so these signals must be level-converted before they are connected to the devices in the system that work at 3.3 V.
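The effect of halving the pixel clock and enabling only alternate lines can be sketched in software as a 2:1 decimation in both directions. This is an illustrative sketch, assuming one value per pixel and assuming that the even-numbered pixels and lines are the ones kept; the function name is hypothetical and the CPLD achieves the same result purely through timing signals.

```python
def downscale_by_two(frame):
    """Keep every other pixel of every other line (e.g. 640x480 -> 320x240).

    `frame` is a list of rows, each row a list of pixel values.
    """
    return [row[::2] for row in frame[::2]]


# Synthetic 640 x 480 frame whose pixel value encodes its position.
full = [[y * 640 + x for x in range(640)] for y in range(480)]
small = downscale_by_two(full)
```

After decimation, `small[r][c]` holds the pixel that was at row `2 * r`, column `2 * c` in the full frame.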

1.4 Frame memory selection

The frame memory can be either a RAM, which needs external address lines, or a FIFO, which does not. To simplify the CPLD design, a FIFO is used as the frame memory. FIFOs are based on either dynamic memory (DRAM) or static memory (SRAM). SRAM needs no refresh circuit, but its capacity is small, so several chips are needed to store one frame of data; DRAM has a large capacity, so a single chip can store one frame, but it requires a refresh circuit. This design uses Averlogic's large-capacity dynamic FIFO memory chip AL422B. Its refresh circuit is relatively simple: WCK or RCK only needs to supply an uninterrupted clock above 1 MHz. The storage capacity of the AL422B is 3MB. One frame of the system usually contains 640 × 480 color pixels, each occupying 2 bytes, so the chip can store the complete information of one frame, and its operating frequency can reach 50 MHz.

1.5 Video processing DSP

In selecting the DSP, processing speed, storage capacity, the processing technology available under existing conditions, and cost-effectiveness were considered, and TI's TMS320VC5509A in a 144-pin package was chosen. Its maximum operating frequency is 200 MHz, which gives a very high processing speed.

After receiving the read notification from the CPLD, the DSP starts to read the video data from the AL422B. To facilitate data processing, an external SDRAM is added to the DSP: the HY57V161610E from Hynix, with a storage capacity of 1M × 16 bits.

After the DSP is powered on and reset, it samples the state of GPIO0 to GPIO3 and decides how to load the program according to the sampled state. In this system, the program is loaded into the DSP from an external flash memory chip through the SPI port. The registers of the image sensor are then initialized through the I2C port of the DSP, the image sensor starts to output signals, and the whole system begins to work.

As a high-speed processor, the DSP is mainly used for image processing. Because the vision system has to perform both recognition and tracking, the amount of data to process is very large. Besides image processing, the DSP also serves as the control processor of the system, thereby forming a visual tracking system.

2 Software design

The system identifies unobstructed target objects by a combination of color and shape. To make the robot fast and responsive in real time, the software mainly uses the color recognition method now common in soccer robots, in which color judgment based on threshold vectors is the most widespread. The principle of color recognition is briefly described below.

2.1 Color space selection

To identify a target by color image segmentation, an appropriate color space must first be selected. Commonly used color spaces include RGB, YUV, HSV, and CMY. The choice of color space directly affects the quality of image segmentation and target recognition.

RGB: The most commonly used color space, in which the brightness is equal to the sum of the R, G, and B components. RGB is a non-uniform color space: the perceived difference between two colors is not linearly proportional to the Euclidean distance between the two points in the space, and the R, G, and B values are highly correlated. For the same color attribute, the RGB values are widely scattered under different conditions (light source type, intensity, and object reflection characteristics). This makes it difficult to determine the threshold of a specific color and its distribution range in the color space. Therefore, a color space in which the luminance component can be separated is usually selected; the most common are YUV and HSV.

HSV: Close to the way the human eye perceives color. H is hue, S is saturation, and V is value. Hue accurately reflects the type of color and has low sensitivity to changes in external lighting conditions. However, H and S are non-linear transformations of R, G, and B and have singular points; near a singular point, even a small change in R, G, or B causes a large jump in the transformed value.

YUV: A luminance-chrominance color space obtained from RGB by a linear transformation. It was proposed to solve the compatibility problem between color and black-and-white television. Y stands for luminance, and U and V stand for the color differences (chrominance). The importance of the YUV representation is that its luminance signal (Y) and chrominance signals (U, V) are independent of each other. The color difference is the difference between a primary color component signal (R, G, or B) and the luminance signal.

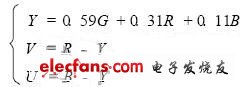

For these reasons, this system uses the YUV color space.

The YUV format and RGB have the following relationship:
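A minimal sketch of this relationship, assuming the widely used BT.601 luminance weights with U and V defined as scaled color differences (the coefficients the system actually uses may differ in scaling or offset, and the function name is hypothetical):

```python
def rgb_to_yuv(r, g, b):
    """Convert one RGB pixel to YUV using BT.601 weights (assumed variant)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b  # luminance
    u = 0.492 * (b - y)                    # scaled blue color difference
    v = 0.877 * (r - y)                    # scaled red color difference
    return y, u, v


white = rgb_to_yuv(255, 255, 255)
red = rgb_to_yuv(255, 0, 0)
```

Because U and V are differences from the luminance, any gray pixel (R = G = B) gives U and V of approximately zero, which is why the chrominance components are less affected by brightness changes than the raw RGB values.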

2.2 Threshold determination and color judgment

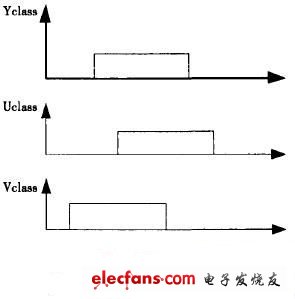

To determine the thresholds, samples are first collected for training to obtain the upper and lower thresholds of each component of the predetermined colors in YUV space, as shown in the figure.
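As a sketch of this training step, the bounds can be taken as the minimum and maximum of each component over the sample pixels. This is an assumption made for illustration: the text does not say how the thresholds are derived from the samples, and a practical system might discard outliers first. The function name and sample values are hypothetical.

```python
def train_thresholds(samples):
    """Return lower and upper bounds per component from (y, u, v) samples."""
    ys, us, vs = zip(*samples)
    return {
        "Y": (min(ys), max(ys)),
        "U": (min(us), max(us)),
        "V": (min(vs), max(vs)),
    }


# Hypothetical sample pixels of one target color, as (Y, U, V) triples.
samples = [(100, 90, 200), (120, 95, 210), (80, 88, 205)]
bounds = train_thresholds(samples)
```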

When the position of a pixel in the color space falls inside the cuboid bounded by these thresholds, the pixel is considered to belong to the desired color, which completes the color identification. In YUV space, the Y value represents brightness and varies widely, so only the U and V values are considered. To perform color judgment, threshold vectors for U and V are first established.

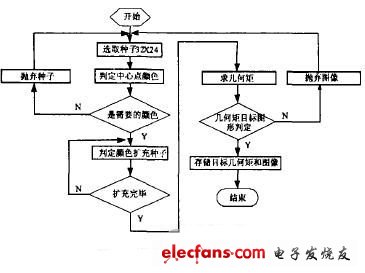
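The threshold-vector test can be sketched as two 256-entry lookup tables. The layout below, one bit per color in each one-byte entry, is an assumption based on the approach common in robot soccer vision; the function names and example ranges are hypothetical.

```python
def build_threshold_vectors(color_ranges):
    """Build U and V lookup tables from per-color (lo, hi) ranges.

    color_ranges: list of ((u_lo, u_hi), (v_lo, v_hi)), one item per color.
    Bit i of an entry is set when that component value is in range for color i.
    """
    if len(color_ranges) > 8:
        raise ValueError("one byte per entry leaves room for at most 8 colors")
    u_vec = [0] * 256
    v_vec = [0] * 256
    for bit, ((u_lo, u_hi), (v_lo, v_hi)) in enumerate(color_ranges):
        mask = 1 << bit
        for u in range(u_lo, u_hi + 1):
            u_vec[u] |= mask
        for v in range(v_lo, v_hi + 1):
            v_vec[v] |= mask
    return u_vec, v_vec


def classify(u, v, u_vec, v_vec):
    """Return a bitmask of the colors whose U and V ranges both contain the pixel."""
    return u_vec[u] & v_vec[v]


# Two hypothetical colors: color 0 and color 1.
u_vec, v_vec = build_threshold_vectors([((88, 95), (200, 210)), ((150, 170), (60, 80))])
```

With this layout, classifying a pixel costs two table lookups and one bitwise AND, regardless of how many colors are defined, which is what makes the method attractive for real-time use.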

Since the digital signal of the image sensor is 8 bits wide, each component takes one of 256 values (0 to 255), and each element of a threshold vector is 1 byte, so the system can distinguish up to 8 colors. Image segmentation is performed after color recognition and uses a seed filling algorithm, in which filling proceeds together with the color judgment of the pixels. At the start, the image is not processed pixel by pixel but block by block. The block used by this system is 32 × 24 pixels, which greatly reduces the amount of calculation. When the center point of a block has the color to be identified, that point is used as a seed and the fill spreads outward, judging the color of the surrounding pixels until the entire block is filled. During this process, shape recognition of the target is performed at the same time, using a global feature vector recognition algorithm. The moment features needed to construct the Jacobian matrix are obtained in the same pass. Figure 3 is a flowchart of image recognition and segmentation.
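The block-wise seed filling can be sketched as follows. Several simplifications are assumed here and are not taken from the system itself: the color judgment is precomputed as a boolean mask rather than evaluated during the fill, the fill is 4-connected and grows over the whole connected region instead of stopping at the block boundary, and only the centroid (first-order moments divided by the area) is computed as an example of a moment feature. All function names are hypothetical.

```python
from collections import deque


def seed_fill(mask, seed):
    """4-connected flood fill over a boolean mask; returns a set of (x, y)."""
    height, width = len(mask), len(mask[0])
    sx, sy = seed
    if not mask[sy][sx]:
        return set()
    region = {(sx, sy)}
    queue = deque([(sx, sy)])
    while queue:
        x, y = queue.popleft()
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            inside = 0 <= nx < width and 0 <= ny < height
            if inside and mask[ny][nx] and (nx, ny) not in region:
                region.add((nx, ny))
                queue.append((nx, ny))
    return region


def find_regions(mask, block_w=32, block_h=24):
    """Test only block centers; grow a region from each matching center."""
    height, width = len(mask), len(mask[0])
    seen = set()
    regions = []
    for cy in range(block_h // 2, height, block_h):
        for cx in range(block_w // 2, width, block_w):
            if mask[cy][cx] and (cx, cy) not in seen:
                region = seed_fill(mask, (cx, cy))
                seen |= region
                regions.append(region)
    return regions


def centroid(region):
    """Centroid from the zeroth moment (area) and first moments of a region."""
    area = len(region)
    return (sum(x for x, _ in region) / area, sum(y for _, y in region) / area)


# Synthetic 64 x 48 mask with one rectangular target covering a block center.
mask = [[10 <= x < 30 and 8 <= y < 20 for x in range(64)] for y in range(48)]
regions = find_regions(mask)
```

Only the four block centers of this small mask are tested before any filling starts, which illustrates why sampling by block reduces the amount of calculation compared with testing every pixel.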
